Authors: Chris Wendler, Veniamin Veselovsky, Giovanni Monea, and Robert West. Title: “Do Llamas Work in English? On the Latent Language of Multilingual Transformers.” Year: 2024

Summary

This research explores if multilingual models use English internally as a pivot language, examining how Llama-2 transformers process embeddings across layers. The findings suggest a bias towards English, raising questions about the inherent biases in such models.

Abstract

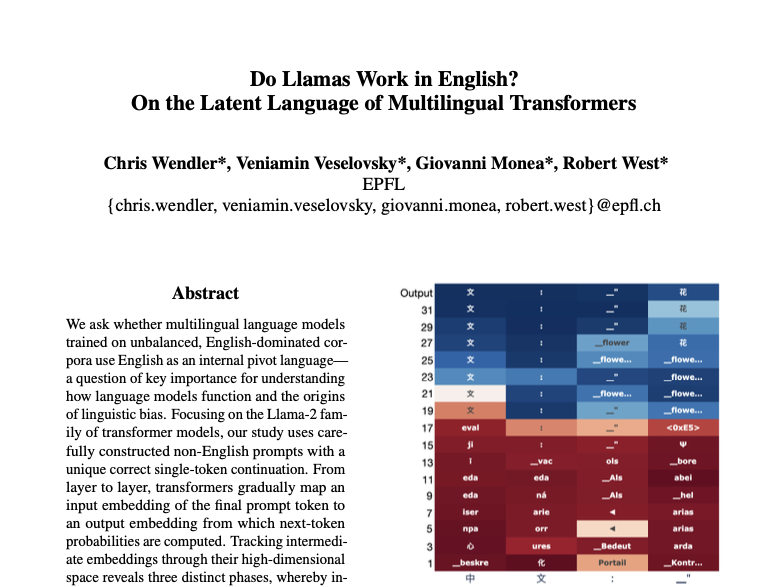

We ask whether multilingual language models trained on unbalanced, English-dominated corpora use English as an internal pivot language — a question of key importance for understanding how language models function and the origins of linguistic bias. Focusing on the Llama-2 family of transformer models, our study uses carefully constructed non-English prompts with a unique correct single-token continuation. From layer to layer, transformers gradually map an input embedding of the final prompt token to an output embedding from which next-token probabilities are computed. Tracking intermediate embeddings through their high-dimensional space reveals three distinct phases, whereby intermediate embeddings (1) start far away from output token embeddings; (2) already allow for decoding a semantically correct next token in middle layers, but give higher probability to its version in English than in the input language; (3) finally move into an input-language-specific region of the embedding space. We cast these results into a conceptual model where the three phases operate in “input space”, “concept space”, and “output space”, respectively. Crucially, our evidence suggests that the abstract “concept space” lies closer to English than to other languages, which may have important consequences regarding the biases held by multilingual language models. Code and data is made available here: https://github.com/ epfl-dlab/llm-latent-language.

Why should you read this paper?

This paper provides essential insights into the operational dynamics of multilingual transformers, shedding light on potential biases that could impact the fairness and effectiveness of language technologies globally.

Key Points

- Multilingual language models may use English as an implicit pivot language.

- The study tracks embeddings across layers, revealing a shift towards English before aligning with the target language.

- Such behavior suggests an inherent bias in how these models process non-English languages, potentially affecting their application and fairness.

Broader Context

The findings connect to broader discussions about AI fairness and the risk of perpetuating English-centric biases in global technologies. Understanding these biases is crucial for developing more equitable multilingual systems.

Q&A

- What is a pivot language? - A pivot language is an intermediary language used to facilitate translation or understanding between multiple other languages.

- How does the Llama-2 model process languages? - It processes embeddings through phases that initially bias towards English before aligning with the target language’s embeddings.

- Why is understanding this bias important? - It helps in assessing and mitigating potential fairness issues in AI applications across different languages.

Deep Dive

The study uses a technique called logit lens to analyze how token probabilities and embeddings evolve across layers, highlighting the initial preference for English embeddings even when the input is in another language.

Future Scenarios and Predictions

Future research could explore methods to neutralize the English bias in multilingual models, potentially leading to more balanced and fair AI systems across languages.

Inspiration Sparks

Consider designing a project that investigates the impact of inherent linguistic biases in AI on non-English speaking populations, using this study’s findings as a starting point.

Wendler, Chris, et al. “Do Llamas Work in English? On the Latent Language of Multilingual Transformers.” arXiv preprint arXiv:2402.10588 (2024).

For the full article please follow the link.