Recent research suggests something surprising about AI chatbots: even when prompted in another language, the large language models behind them appear to route their internal processing through representations that sit closest to English. The finding comes from an analysis of Llama 2, Meta's popular openly available model, by Chris Wendler and Veniamin Veselovsky's team at the Swiss Federal Institute of Technology in Lausanne (EPFL).

Why English?

The most likely reason for this English bias is the training data: the overwhelming majority of the text used to train models like Llama 2 is in English. As a result, the model learns to associate ideas and concepts most strongly with English words and phrases.

How They Found Out

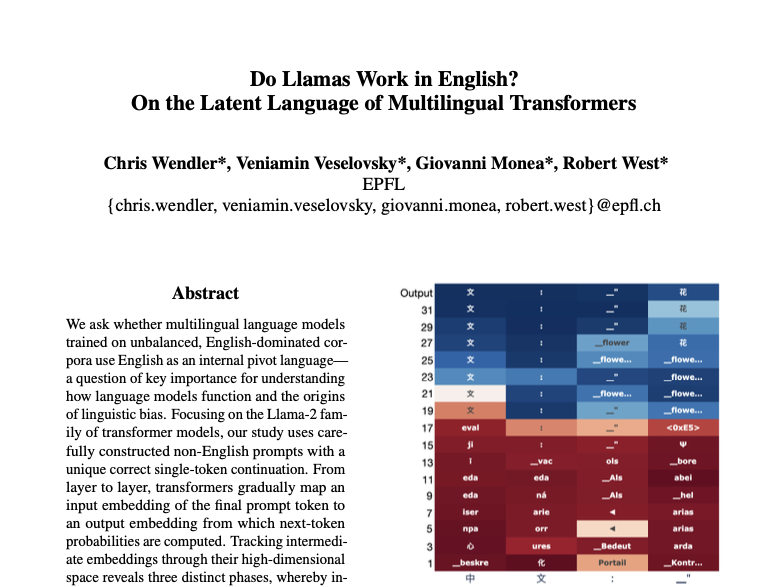

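The team gave Llama 2 translation, repetition, and fill-in-the-blank (cloze) tasks in Chinese, French, German, and Russian, then decoded what each intermediate layer of the model would predict. Across tasks, the middle layers favored the English version of the answer before the final layers switched to the correct word in the target language. The authors interpret this not as literal translation but as evidence that the model reasons in an abstract "concept space" that lies closer to English than to other languages, regardless of the language it is prompted in.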
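The probing technique behind this kind of result, known as the logit lens, takes the hidden state at an intermediate layer and passes it through the model's final normalization and output (unembedding) layer early, to see which token the model "would" predict at that depth. Below is a minimal sketch in plain Python, assuming a made-up three-word vocabulary, 2-dimensional hidden states, and an RMSNorm without its learned scale. Every name and number here is hypothetical and chosen only to illustrate the idea; none of it comes from Llama 2's weights or the paper's code.

```python
import math

def rms_norm(h, eps=1e-6):
    # Llama-style RMSNorm, simplified: the learned per-dimension scale is omitted (assumption).
    scale = math.sqrt(sum(x * x for x in h) / len(h) + eps)
    return [x / scale for x in h]

def softmax(logits):
    # Numerically stable softmax: subtract the max logit before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def logit_lens(hidden, unembed):
    """Decode one intermediate hidden state into a next-token distribution
    by applying the final norm and the unembedding matrix early."""
    h = rms_norm(hidden)
    logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
    return softmax(logits)

# Hypothetical toy vocabulary and unembedding matrix (one row per token).
VOCAB = ["flower", "fleur", "the"]
UNEMBED = [
    [1.0, 0.0],    # "flower": English answer
    [0.0, 1.0],    # "fleur": French (target-language) answer
    [-1.0, -1.0],  # "the": unrelated token
]

# Made-up hidden states for the final prompt token at an early, middle, and late layer.
HIDDEN_BY_LAYER = [
    [0.1, 0.1],
    [2.0, 0.5],
    [0.3, 2.0],
]

def language_trace(hidden_by_layer, unembed, en_idx, target_idx):
    # For each layer, record the probability of the English and target-language tokens.
    trace = []
    for layer, h in enumerate(hidden_by_layer):
        probs = logit_lens(h, unembed)
        trace.append((layer, probs[en_idx], probs[target_idx]))
    return trace

for layer, p_en, p_fr in language_trace(HIDDEN_BY_LAYER, UNEMBED, 0, 1):
    print(f"layer {layer}: P(English)={p_en:.2f}  P(French)={p_fr:.2f}")
```

With these invented numbers, the early layer is undecided, the middle layer leans toward "flower", and the last layer leans toward "fleur", mirroring the pattern described above. A real analysis would apply the same decoding to every layer of the actual model, across many prompts.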

Why It Matters

This reliance on English raises concerns. Experts such as Aliya Bhatia and Carissa Véliz warn that it could narrow the model's worldview, so that ideas and expressions unique to other languages get lost. It might also lead AI systems to make culturally inappropriate or incorrect decisions, which is especially worrying in high-stakes settings such as asylum applications.

Looking Forward

This insight into how AI models process language underscores the need for more inclusive systems. By diversifying training data to include more languages and cultures, developers can build AI that understands and communicates with the global population more effectively. In essence, making AI truly "speak" multiple languages takes more than translating words; it requires understanding and respecting the rich diversity of human thought and culture.

Research article: C. Wendler, V. Veselovsky, G. Monea, R. West. "Do Llamas Work in English? On the Latent Language of Multilingual Transformers."