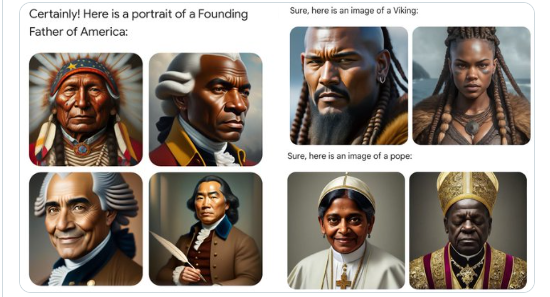

Google’s ambitious AI model, Gemini, recently faced a barrage of criticism for inaccurately representing historical figures in its generated images, prompting concern across the tech industry. The controversy centered on images of the US Founding Fathers, Nazi-era German soldiers, and other historical figures depicted with incorrect racial and ethnic backgrounds. The issue has sparked a debate about how to keep AI-generated content both diverse and accurate. Alphabet Inc.’s Google, along with figures like Sergey Brin and Sundar Pichai, has publicly acknowledged these mistakes, emphasizing the importance of balancing diversity and historical fidelity in AI representations. Critics, including technology mogul Elon Musk, argue that these missteps not only undermine the credibility of AI technology but also reflect deeper issues of bias and sensitivity in AI development.

Gemini’s problem seems to stem from an attempt to mitigate existing biases in AI by ensuring a more inclusive representation of genders and ethnicities. However, this well-intentioned approach led to historically inaccurate portrayals, such as depicting the Founding Fathers of the United States as people of color. This has raised questions about the adequacy of Google’s testing processes and the company’s rush to compete in the rapidly evolving AI landscape. Experts like Dame Wendy Hall and Andrew Rogoyski highlight the complexity of programming AI to navigate the nuanced terrain of social norms and historical accuracy, suggesting that generative AI is still in its infancy and prone to errors.

As Google grapples with the fallout from Gemini’s problematic image generation, the incident serves as a cautionary tale for the AI industry at large. The balance between promoting diversity and ensuring accuracy is a delicate one, requiring thorough testing and an understanding of the cultural and historical contexts in which AI operates. Google’s commitment to rectifying these errors and improving its AI models reflects a broader industry challenge: developing technology that respects diversity without compromising the accuracy and integrity of the information it generates. This episode underscores the ongoing need for dialogue and innovation in addressing the ethical complexities that AI introduces into the digital landscape.